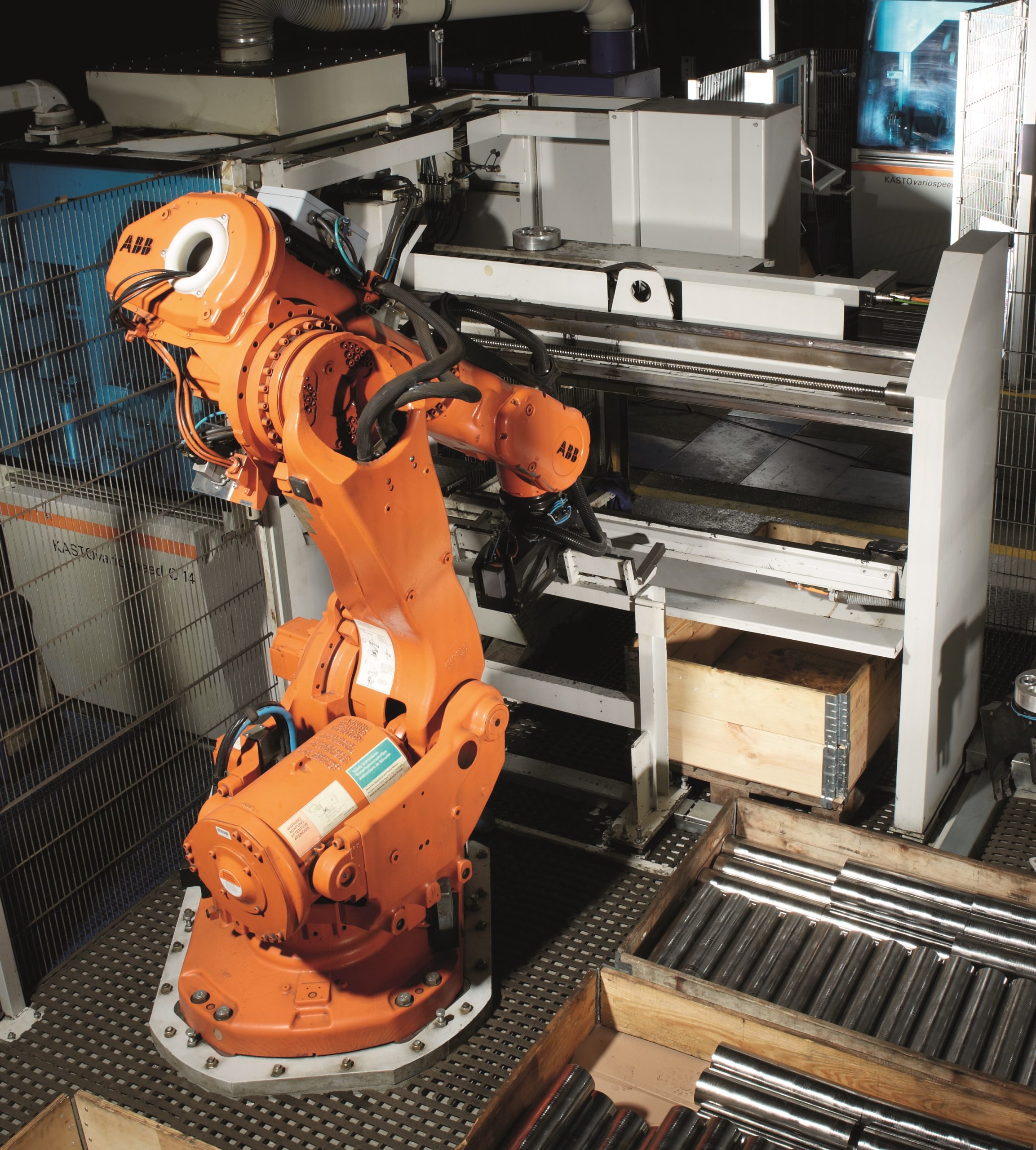

I first learned of OpenCV during a meeting with Jeff Bier, founder of the Embedded Vision Alliance (some of my conversation with Jeff is captured in my post “Computer Vision: An Opportunity or Threat?”). It was Jeff’s description of the types of content presented at the Embedded Vision Alliance Summit that led me to a presentation by OpenCV’s president and CEO Gary Bradski (who is also a senior scientist at Willow Garage), and from there to learn more about OpenCV and what it could mean for the future of industrial robotics.

The routines offered via OpenCV focus on real-time image processing and 2D and 3D computer vision. The library is available for Linux, Windows, Mac, Android and iOS and can be programmed with C++, C, C#, Java, Matlab and Python. While watching Bradski’s presentation, delivered at a recent Embedded Vision Alliance Summit, I learned that OpenCV’s algorithm modules are particularly useful in industrial robotic applications because they address relevant issues such as image processing, calibration, object recognition/machine learning, segmentation, optical flow tracking and more.

Noting that the paradigm of industrial robotic applications is welding, Bradski points out that in such applications robots are essentially blind, dumb and repetitive, albeit highly accurate. Because these welding robots typically lack vision, the products to be welded (usually cars) have to be staged and moved into the robotic welding area with incredible accuracy, which requires a lot of planning and orchestration. “Programmed with ladder logic, these (welding) applications are a throwback to 1980s robotics technology,” says Bradski, which means the technology is mostly used in high-margin applications where the products are rigid and have a long shelf life (which is why industrial robotics has such a foothold in the automotive industry).
“Products like cell phones are typically assembled by hand because it takes so long to fixture a robotic line for cell phone assembly. By the time you had the line set up, you’d be on to the next version of the cell phone.”

Robotics 2.0, in Bradski’s parlance, deals with robots being able to more easily handle greater levels of variance. To make this jump to Robotics 2.0, robots must be able to see (ideally in 3D), should be controlled by PCs rather than PLCs for direct wiring into motors, and will no longer conduct only rote moves; instead they’ll be driven adaptively. “In order to understand and grasp objects, robots need to recognize objects and object categories as well as know the pose of objects in six degrees of freedom relative to robot coordinate frames,” says Bradski. Both pose and recognition can be obtained in 2D and 3D.

To appreciate that Bradski’s vision is not confined to academic research but is rooted in real-world application, it’s helpful to know that Bradski organized the vision team for Stanley, Stanford University’s autonomously controlled vehicle that won the DARPA Grand Challenge. Stanley used sensors and lasers to gain 2D and 3D perception for planning and control. According to Bradski, the laser on Stanley was used to teach the vision system, i.e., the laser would find the “good road” and the vision system would then be used to find more of it. What the vision system captured was segmented into a red, green and blue pixel distribution. This segmented road, composed of bad trajectories and good trajectories, was then integrated into a rolling world model and passed to the vehicle’s planning and control system.
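To make the "laser teaches the vision system" idea more concrete, here is a minimal sketch in plain Python. It is not Stanley's actual implementation, which the presentation did not detail. It assumes the laser has already labelled a few nearby pixels as drivable road, models their color with a simple per-channel mean and standard deviation (a simplifying assumption), and then marks any camera pixel with a similar color as road. All function names, the `max_sigma` threshold and the sample pixel values are hypothetical.

```python
from statistics import mean, pstdev


def fit_road_model(road_pixels):
    """Fit a per-channel mean and spread to RGB samples that the laser
    has already labelled as drivable road (assumed input)."""
    channels = list(zip(*road_pixels))  # -> (reds, greens, blues)
    means = [mean(c) for c in channels]
    # Floor the spread so a perfectly uniform sample cannot collapse to zero.
    stds = [max(pstdev(c), 1.0) for c in channels]
    return means, stds


def looks_like_road(pixel, model, max_sigma=3.0):
    """True if every channel lies within max_sigma standard deviations
    of the laser-labelled road color."""
    means, stds = model
    return all(abs(v - m) <= max_sigma * s
               for v, m, s in zip(pixel, means, stds))


def segment(image, model, max_sigma=3.0):
    """Return a boolean mask (list of rows) marking road-colored pixels."""
    return [[looks_like_road(p, model, max_sigma) for p in row]
            for row in image]


# Hypothetical data: greyish road samples found by the laser near the vehicle.
laser_road_samples = [(100, 100, 100), (110, 108, 105),
                      (95, 97, 99), (105, 103, 102)]
model = fit_road_model(laser_road_samples)

# A tiny 2x3 "camera image" reaching farther than the laser:
# grey pixels stand in for road, green pixels for grass.
image = [
    [(102, 101, 100), (30, 160, 40), (98, 99, 101)],
    [(108, 106, 104), (104, 102, 101), (25, 150, 35)],
]
mask = segment(image, model)
for row in mask:
    print(row)
```

In this toy example the grey pixels are marked as road and the green ones are not. The point is the division of labor: the short-range but reliable sensor supplies the training labels, and the camera extends that knowledge to parts of the scene the laser cannot reach.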

David Greenfield is Director of Content for Automation World. He has been covering industrial technologies, ranging from software and hardware to embedded systems, for more than 20 years. Prior to joining Automation World in June 2011, David was Editorial Director of UBM Electronics’ Design News magazine, which covers system and product design engineering. He moved to UBM after serving as Editorial Director of Control Engineering at Reed Business Information (a division of Reed Elsevier Inc.), where he also worked on Manufacturing Business Technology as publisher, several years earlier. In addition, he has held editorial positions at Putman Media and Lionheart Publishing.